Calibration is the process of ensuring that a measuring instrument provides accurate and reliable readings by comparing it to a known, higher-accuracy standard. This practice helps determine how closely an instrument’s measurements align with the standard and corrects any discrepancies, making sure the instrument is functioning within its specified limits.

It typically involves two main objectives: checking the instrument’s accuracy and ensuring the traceability of the measurement to a recognized standard, often a national or international one.

Beyond simple accuracy checks, calibration can also involve repairs or adjustments if the instrument is found to be out of tolerance.

Once it is complete, a report is generated, showing the instrument’s performance before and after the calibration, including any errors corrected during the process. This helps ensure the equipment delivers precise, dependable measurements throughout its use.

What Is Calibration?

Calibration is the process of ensuring measurement tools provide accurate results. It compares an instrument’s output with a known standard. That standard must be more accurate than the device being tested.

It helps maintain measurement accuracy over time. It corrects any drift or change in instrument performance. Without calibration, instruments can give false or misleading data.

Accurate instruments are critical in many industries. From healthcare to manufacturing, calibration plays a key role in quality and safety. Even small errors can lead to big problems.

Why is Calibration Important?

Calibration plays a crucial role in maintaining the accuracy and reliability of measuring instruments over time. Many instruments tend to drift from their calibrated settings, especially when measuring variables like temperature, humidity, or pressure.

Without regular calibration, instruments may give incorrect readings, leading to unreliable results that can cause serious issues, particularly in industries where accuracy is essential.

For example, in food safety, if a thermometer in a commercial kitchen is out of calibration, it may lead to incorrect readings when monitoring food temperatures, potentially resulting in foodborne illnesses, legal consequences, or closure orders.

Similarly, in manufacturing, improper calibration of equipment can lead to defects, production delays, and even accidents, underscoring the importance of consistent and accurate calibration.

In essence, it minimizes measurement uncertainty, ensuring that instruments provide accurate data. This is vital not only for everyday operations but also for maintaining the quality of products, safety standards, and compliance with regulatory requirements.

Purpose

The main goal of calibration is to improve reliability. Instruments used in labs, factories, and the field must give trustworthy data. Calibration ensures that.

We use it to:

- Detect measurement errors

- Correct performance issues

- Comply with industry standards

- Improve process quality

- Prevent equipment damage

For example, in the food industry, incorrect temperature readings could spoil products. In aviation, incorrect pressure readings could be dangerous. That’s why regular calibration is vital.

Key Concepts

Calibration is about comparing a device or instrument against a reference standard, ensuring the accuracy of the readings. Typically, a calibration standard is chosen to be at least four times more accurate than the instrument being tested.

Calibration may also involve adjusting the instrument to improve its accuracy, though adjustment is often referred to as “optimizing” the device rather than as part of the calibration itself.

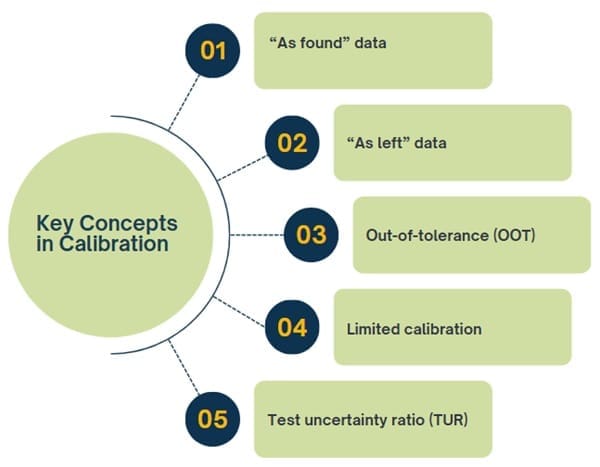

Several important terms are associated with calibration:

- “As found” data: The measurement reading taken before any adjustment of the instrument.

- “As left” data: The reading taken after the instrument is adjusted to meet the correct standards.

- Out-of-tolerance (OOT): When an instrument falls outside its specified limits, indicating that calibration or adjustment is required.

- Limited calibration: A type of calibration focusing only on specific aspects or functions of an instrument.

- Test uncertainty ratio (TUR): The ratio of the tolerance of the instrument under test to the uncertainty of the reference standard used to calibrate it.

Key Terms in Calibration

Let’s define a few important terms:

- Zero: The lowest value in a measurement range.

- Span: The difference between the highest and lowest values.

- Range: The limits within which a device measures.

- Tolerance: The maximum allowed deviation from a true value.

- Accuracy: How close a measurement is to the actual value.

These terms are used when setting up or reviewing calibrations. Misunderstanding them can lead to mistakes.

Calibration Range vs Instrument Range

An instrument may be capable of measuring a wide range. But you might only calibrate it over part of that range.

For example, a pressure transmitter can measure 0–750 psig. However, if your process only uses 0–300 psig, you calibrate for that range. The calibration range is smaller than the instrument’s full range.

This saves time and improves focus. You only verify the part of the range that matters.

Step by Step Process of Calibration

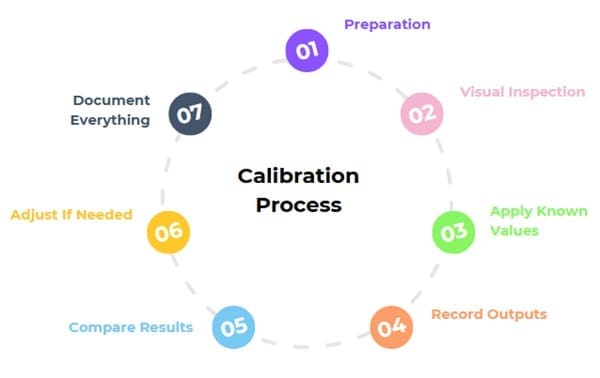

- Preparation: Collect all needed tools. This includes reference standards, documentation, and the device itself.

- Visual Inspection: Check for damage or dirt. Make sure the device is in good physical condition.

- Apply Known Values: Use a standard to apply known inputs to the device.

- Record Outputs: Document how the device responds to each known value.

- Compare Results: Compare the device’s output to expected values. Calculate errors if any.

- Adjust If Needed: Adjust the zero and span settings. Re-check to confirm the fix.

- Document Everything: Write down all readings, adjustments, and test details.
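As a rough sketch of the apply, record, and compare steps, the Python below checks a set of as-found readings against a tolerance. The 0–300 psig range, the 4–20 mA output, the ±0.10 mA tolerance, the readings themselves, and the names `CalPoint`, `ideal_ma`, and `evaluate` are all assumptions made for illustration, not part of any standard procedure.

```python
from dataclasses import dataclass


@dataclass
class CalPoint:
    applied_psig: float   # known input applied with the reference standard
    measured_ma: float    # output recorded from the device under test


def ideal_ma(applied_psig, lrv=0.0, urv=300.0):
    """Ideal output, assuming a linear 4-20 mA response over lrv..urv."""
    return 4.0 + 16.0 * (applied_psig - lrv) / (urv - lrv)


def evaluate(points, tol_ma=0.10):
    """Return (input, output, error, passed) for each test point."""
    rows = []
    for p in points:
        error = p.measured_ma - ideal_ma(p.applied_psig)
        rows.append((p.applied_psig, p.measured_ma, round(error, 3), abs(error) <= tol_ma))
    return rows


# Hypothetical as-found readings at 0, 25, 50, 75, and 100 % of range.
as_found = [
    CalPoint(0, 4.02),
    CalPoint(75, 8.03),
    CalPoint(150, 12.05),
    CalPoint(225, 16.12),
    CalPoint(300, 20.15),
]

report = evaluate(as_found)
needs_adjustment = not all(passed for *_, passed in report)
```

With these made-up readings the top two points exceed the tolerance, so `needs_adjustment` is true and the device would be adjusted and re-tested before the results are documented.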

Calibration Best Practices

Implementing an effective calibration program is essential for any business that relies on measurement devices. It not only ensures accuracy but also helps improve overall business efficiency and reduce costs by preventing errors or defects. Below are some of the basic practices to follow for effective calibration:

- Create a calibration schedule: Each instrument should have a set calibration schedule based on manufacturer recommendations. The schedule should include timeframes for calibration and include a system for tracking calibration status.

- Use a comprehensive equipment list: A well-maintained equipment list is vital for tracking which instruments need calibration and which do not. It also helps ensure that instruments are correctly labeled, so they aren’t mistakenly used for tasks that require calibrated instruments.

- Document calibration results: It’s crucial to document all calibration procedures and outcomes. This documentation should include details such as the instrument’s “as found” and “as left” readings, calibration procedure followed, and the technician responsible for performing the calibration.

- Regularly update calibration practices: Calibration procedures should be periodically reviewed to ensure they align with current industry standards and best practices.

Errors Detected by Calibration

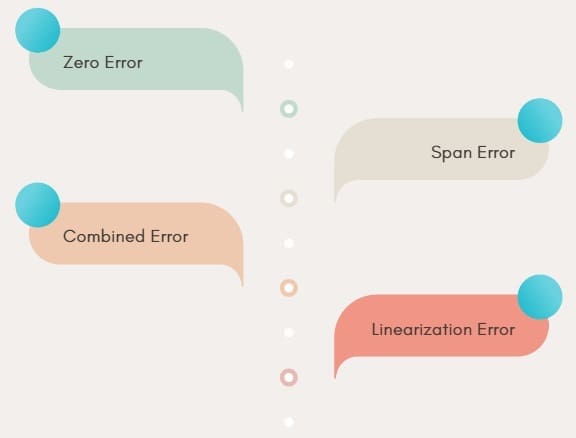

Let’s look at the types of errors calibration can reveal.

1. Zero Error

The device doesn’t start at the correct baseline. For example, at 0 input, the output is 5 mA instead of 4 mA.

2. Span Error

The device doesn’t respond correctly at full range. For example, the output at 300 psig is 21 mA instead of 20 mA.

3. Combined Error

Both zero and span are wrong. This shifts and stretches the entire range.

4. Linearization Error

The output doesn’t follow a straight line. The instrument responds inconsistently across the range.

If linearization errors can’t be corrected, the device should be replaced.
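One way to put numbers on these error types is sketched below in Python. The definitions are simplifying assumptions: zero error is taken as the offset at the bottom of the range, span error as the difference between the measured and ideal output span, and nonlinearity as the largest deviation from the straight line through the end points. The function names are hypothetical.

```python
def zero_and_span_error(out_at_lrv, out_at_urv, ideal_lo=4.0, ideal_hi=20.0):
    """Return (zero_error, span_error) in mA for a 4-20 mA device."""
    zero_error = out_at_lrv - ideal_lo
    span_error = (out_at_urv - out_at_lrv) - (ideal_hi - ideal_lo)
    return zero_error, span_error


def max_nonlinearity(points):
    """Largest deviation from the line joining the first and last point.

    points: list of (input, output) pairs sorted by input.
    """
    (x0, y0), (x1, y1) = points[0], points[-1]
    slope = (y1 - y0) / (x1 - x0)
    return max(abs(y - (y0 + slope * (x - x0))) for x, y in points)


# Pure zero shift: 5 mA at the bottom of the range, 21 mA at the top.
zero_only = zero_and_span_error(5.0, 21.0)

# Pure span error: correct at the bottom, 21 mA instead of 20 mA at the top.
span_only = zero_and_span_error(4.0, 21.0)

# End points are correct but the midpoint bows away from the straight line.
bowed = max_nonlinearity([(0, 4.0), (150, 12.3), (300, 20.0)])
```

A combined error shows up as both values being nonzero, for example an output of 5 mA at the bottom and 22 mA at the top.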

Tolerance and Accuracy

Tolerance is how far a result can deviate from the true value. It’s a set limit. If a reading falls within this limit, it passes.

Accuracy is different. It’s about how close a value is to the truth. For calibration, we care about both.

Example

Say you have a pressure sensor calibrated for 0–300 psig with a 4–20 mA output. The calibration tolerance is ±2 psig, which works out to roughly ±0.10 mA on the 16 mA output span. If you apply 150 psig, the midpoint of the range, the output should be 12 mA.

If the device gives 11.9 mA, it’s within the ±0.10 mA tolerance. That’s acceptable. If it gives 11.5 mA, it’s out of tolerance. It needs adjustment.
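The arithmetic in this example can be sketched in Python, assuming the transmitter responds linearly across its calibrated range. The function names and default arguments are illustrative only. Note that ±2 psig converts to about ±0.107 mA, which the example rounds to ±0.10 mA.

```python
def expected_ma(pressure_psig, lrv=0.0, urv=300.0, out_lo=4.0, out_hi=20.0):
    """Ideal output in mA for an applied pressure, assuming a linear response."""
    fraction = (pressure_psig - lrv) / (urv - lrv)
    return out_lo + fraction * (out_hi - out_lo)


def tolerance_ma(tol_psig, lrv=0.0, urv=300.0, out_lo=4.0, out_hi=20.0):
    """Convert a tolerance in input units (psig) to output units (mA)."""
    return tol_psig * (out_hi - out_lo) / (urv - lrv)


def within_tolerance(measured_ma, pressure_psig, tol_ma=0.10):
    """True if the reading deviates from the ideal by no more than tol_ma."""
    # A tiny epsilon keeps a reading that sits on the limit from failing
    # because of floating-point rounding.
    return abs(measured_ma - expected_ma(pressure_psig)) <= tol_ma + 1e-9


midpoint = expected_ma(150)          # 12.0 mA
ok = within_tolerance(11.9, 150)     # True
bad = within_tolerance(11.5, 150)    # False
```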

Setting Tolerances

Never guess your tolerance values. Base them on:

- Process requirements

- Manufacturer specs

- Industry guidelines

- Test equipment capability

State tolerances in fixed measurement units (such as psig or °C), not percentages. This reduces calculation mistakes.

If your process requires ±5°C and your equipment handles ±0.25°C, you might choose ±1°C as the tolerance. This ensures both accuracy and consistency.

Accuracy Ratio

The accuracy ratio compares the reference standard to the test instrument. A common recommendation is 4:1. This means the standard should be four times more accurate.

For example, if your instrument has a ±1°C tolerance, your test equipment should be ±0.25°C or better. This makes errors less likely and gives more confidence in results.

As equipment becomes more precise, maintaining this 4:1 ratio is harder. Still, aim for it when possible.
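A quick check of the ratio might look like the following Python sketch. The function names are hypothetical, and both tolerances are assumed to be expressed in the same units.

```python
def accuracy_ratio(instrument_tol, standard_tol):
    """Ratio of the instrument's tolerance to the reference standard's."""
    if standard_tol <= 0:
        raise ValueError("standard tolerance must be positive")
    return instrument_tol / standard_tol


def meets_ratio(instrument_tol, standard_tol, required=4.0):
    """True if the standard is at least `required` times tighter."""
    return accuracy_ratio(instrument_tol, standard_tol) >= required


# Instrument tolerance of 1 degC checked with a 0.25 degC standard: 4:1.
ratio = accuracy_ratio(1.0, 0.25)
adequate = meets_ratio(1.0, 0.25)      # True
too_coarse = meets_ratio(1.0, 0.5)     # False, only 2:1
```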

Determining Calibration Intervals

Calibration intervals—the time between each calibration—are typically determined by the equipment manufacturer based on expected drift rates of components. However, companies should consider additional factors when deciding on their calibration schedules:

- The critical nature of the measurements: For highly sensitive measurements, more frequent calibrations may be necessary.

- The history of the instrument’s performance: Instruments that frequently go out of tolerance might require more frequent checks.

- The specific needs of the operation: Some industries may require more stringent calibration schedules due to regulatory or safety concerns.

By considering these factors, businesses can tailor their calibration intervals to meet their operational needs while avoiding unnecessary downtime or excessive costs.

Importance of Traceability

Calibration isn’t useful unless it’s traceable. Traceability means you can link your calibration results back to national or international standards.

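In the U.S., the National Institute of Standards and Technology (NIST) maintains these references. Other countries have their own national metrology institutes, such as NPL in the UK, and the BIPM coordinates measurement standards internationally. Accreditation bodies like UKAS assess whether calibration labs are competent to deliver traceable results.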

Traceability creates a reliable chain. A lab calibrates your shop tools. That lab uses higher-level equipment, which is itself checked against national standards.

Your role is to ensure your test equipment stays within its calibration schedule. Record its ID and date on your reports. Make sure the calibration lab used traceable equipment.

Understanding Uncertainty

Every measurement has some level of uncertainty. We can never be 100% sure, but we can estimate how unsure we are.

Uncertainty depends on:

- Test equipment accuracy

- Environmental factors

- Human error

- Instrument condition

To find total uncertainty, combine all known error sources. Use the root sum of squares method. This gives a combined uncertainty value.

Labs accredited to ISO/IEC 17025 are required to calculate and document uncertainty. Even if you’re not a lab, it’s good to understand this.
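The root sum of squares combination mentioned above can be sketched in a few lines of Python. This assumes the error sources are independent of one another and are all expressed in the same units. The function name and the sample values are hypothetical.

```python
import math


def combined_uncertainty(components):
    """Combine independent uncertainty components by root sum of squares."""
    return math.sqrt(sum(u ** 2 for u in components))


# Hypothetical contributions in degC: reference standard, environment, readability.
total = combined_uncertainty([0.25, 0.10, 0.05])   # roughly 0.27 degC
```

The combined value is dominated by the largest component, which is why improving the biggest error source usually pays off most.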

Adjusting the Instrument

Most instruments allow basic adjustments:

- Zero Adjustment: Moves the entire output curve up or down.

- Span Adjustment: Changes the slope of the curve.

- Linearization Adjustment: Fine-tunes curve shape (if available).

After adjustments, repeat the test. Make sure results now meet the specified tolerance.
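To illustrate how zero and span adjustments relate to offset and slope, the Python sketch below computes a two-point linear correction from as-found readings at the bottom and top of the range. This is only the arithmetic, under the assumption of a linear 4–20 mA device. Real instruments are adjusted through their own trim controls or configuration tools, and the function names here are hypothetical.

```python
def two_point_correction(found_lo, found_hi, ideal_lo=4.0, ideal_hi=20.0):
    """Return (gain, offset) mapping as-found end points onto the ideal ones.

    gain plays the role of the span adjustment (slope);
    offset plays the role of the zero adjustment (shift).
    """
    if found_hi == found_lo:
        raise ValueError("as-found end points must differ")
    gain = (ideal_hi - ideal_lo) / (found_hi - found_lo)
    offset = ideal_lo - gain * found_lo
    return gain, offset


def apply_correction(reading, gain, offset):
    """Apply the linear correction to a raw reading."""
    return gain * reading + offset


# As-found: 5 mA at the bottom of the range and 22 mA at the top.
gain, offset = two_point_correction(5.0, 22.0)
corrected_lo = apply_correction(5.0, gain, offset)    # about 4 mA
corrected_hi = apply_correction(22.0, gain, offset)   # about 20 mA
```

A linear correction like this cannot remove a linearization error, so intermediate points still need to be re-tested afterward.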

When to Calibrate

Calibration should not be a one-time task. Set a schedule based on:

- Manufacturer recommendations

- Usage frequency

- Environment

- Quality system requirements

Typical intervals are every 6 or 12 months. Critical instruments may need more frequent checks.

Consequences of Skipping Calibration

Skipping calibration can lead to:

- Poor product quality

- Customer complaints

- Legal non-compliance

- Equipment damage

- Safety hazards

It’s not just about numbers—it’s about trust and safety.

Industries That Depend on Calibration

- Pharmaceuticals: Ensures correct dosages and reactions.

- Oil & Gas: Ensures safe pressures and flows.

- Food & Beverage: Keeps temperatures and ingredients within safe limits.

- Aerospace: Maintains flight safety through correct pressure and altitude readings.

- Healthcare: Guarantees accurate readings from medical devices.

Role of Calibration Technicians

Calibration techs are key players in quality control. They:

- Perform scheduled calibrations

- Document results

- Adjust instruments

- Maintain traceability

- Identify and report issues

They must stay organized and follow procedures closely.

Final Thoughts

Calibration might seem like a technical formality. But it directly impacts safety, quality, and trust. It ensures the data we rely on is accurate and consistent.

Every time you take a measurement, ask yourself: “When was this last calibrated?” That single question can prevent major mistakes.